By: Datuk Ir. Ts. Br. Dr. Amarjit Singh

BEng(Hons)(Civil), MSc(Water), PhD(Eng)(Education), PEPC, PTech, MIEM, MWA, ACPE, AVS, AAE, CCPM, MACEM

Perunding WENCO Sdn. Bhd.

Abstract

The Institution of Engineers Malaysia (IEM) and the Board of Engineers Malaysia (BEM) have stressed the need for Malaysian engineering graduates to assimilate sustainable development knowledge, skills and values into their professional practice. To produce graduates with the attributes expected of 21st-century engineers, engineering education has to adopt strategies that deliver the desired quality of graduates. The purpose of this study is to develop and validate an engineering employability skills framework for Malaysian engineering graduates, building on the Malaysia Engineering Employability Skills (MEES) model developed by Zaharim, Yusoff, Omar and Mohamed (2009). The objective is to elucidate the most theoretically and statistically accurate factor structure of the previously developed MEES model using Structural Equation Modelling (SEM), based on pertinent stakeholders’ perceptions of the essential skills required and current needs in engineering fields. The survey responses were analysed, and confirmatory factor analysis (CFA) in AMOS was used to establish measurement validity and reliability and to test the hypotheses.

Keywords: Malaysia Engineering Employability Skills (MEES), Structural Equation Model (SEM), Engineering Graduates, Engineering Education, Confirmatory Factor Analysis (CFA)

1.0 Introduction

The purpose of this quantitative study was to explicate the most theoretically and statistically precise factor structure of the previously developed MEES model using Structural Equation Modelling (SEM). The findings were assessed for content validity and extended as a yardstick for the soft skills developed and their importance as observed by employers in engineering industries. The Malaysian Engineering Employability Skills (MEES) framework is the most comprehensive framework developed by a group of researchers (Zaharim et al., 2009). However, since its development in 2009, no research has been conducted to examine whether this inclusive model of engineering employability skills is still relevant for assessing the current employability skills of undergraduate engineering students. It is therefore worth examining whether the hypothesised factor structure of the model, which was developed using exploratory factor analysis, still holds as a benchmark for the soft skills acquired by current engineering undergraduates, graduates and unemployed graduates. Employability skills are not a new concept. Engineering employability skills, also called generic skills or soft skills, are closely related to non-technical skills or abilities. The skills are transferable (Yorke, 2006) and applicable from one workplace to another. Most countries have developed frameworks on engineering employability skills as a guide for engineering employers and employees as well as for graduates.

The aim of this study was to scrutinise the level of consensus among 100 Malaysian engineering employees regarding the communication, teamwork, knowledge, competence in application and practical problem solving, professionalism, leadership, responsibility, decision making, interdependence, innovative, technical element and information technology skills of engineering graduates, specifically in the Malaysian context, using confirmatory factor analysis. The research question was: What are the most logical factor structures of engineering employability skills using Structural Equation Modelling (SEM) based on employers’ ratings of employability skills?

2.0 Literature Review

The ideal situation for both institutions of higher learning and industry is to operate in perfect synchronisation (Ezhilan, 2017). There is a persistent mismatch between the requirements of industry for engineering graduates and the tangible competencies they have acquired during their academic studies (Lowden, Hall, Elliot & Lewin, 2011; Willian, 2015). Many studies have been reported in this area from Malaysia (for example, Ahmed Umar Rufai, Ab Rahim Bin Bakar & Abdullah Bin Mat Rashid, 2015; Mohd Hazwan Mohd Puad, 2015; Mohamad Zuber Abd Majid, Muhammad Hussin, Muhammad Helmi Norman & Saraswathy Kasavan, 2020) and other countries (for example, Ezhilan, 2017; Kaushal, 2017; Sushila Shekhawat, 2020; de Campos, de Resende & Fagundes, 2020). The vast array of research on engineering employability skills indicates the importance of identifying, defining and investigating the sustainability of the soft skills that affect employability in engineering fields, thus filling the gap between education and the job market.

The present investigation is designed to develop and validate an engineering employability skills framework to enhance engineering graduates’ employability skills.

3.0 Methodology

A confirmatory factor analysis (CFA) was conducted to assess the validity and reliability of the hypothesised measurement model before evaluating the theoretical model, as suggested by Arbuckle (2010). CFA allows testing of the hypothesis that a relationship exists between observed variables and their underlying latent constructs (Suhr, 1999). Capmouteres and Anand (2016) described CFA as a tool used to confirm or reject the measurement theory. In this study, factor analysis was performed on the 14 groups of items to determine whether the items correlated with each other and how many components were involved. CFA was also used to test the validity of the extracted factors and the reliability of the latent constructs formed by the indicators (items). The CFA used in this study is a second-order confirmatory factor analysis, a measurement model that consists of two levels. The first level of analysis runs from each dimension’s latent construct to its indicators, and the second runs from the higher-order latent construct to its dimension constructs. This study aims to test the construct validity and reliability of the employability construct using constructs drawn from previous studies.

3.1 Approach to CFA

After the questionnaires were administered, the second phase of the study was the application of CFA. Based on the questions asked in the questionnaires, the main criteria and their ranking of importance were selected by 100 employees in different types of engineering organisation, such as contractors, consultants, private service industries, developers and government agencies, as shown in Table 1.

The objective is to elucidate the most theoretically and statistically accurate factor structure of the previously developed MEES model using Structural Equation Modelling (SEM), based on pertinent stakeholders’ perceptions of the essential skills required and current needs in engineering fields. Given that purpose, this study employed the design and development research (DDR) approach, implemented in three phases as recommended by Richey and Klein (2007): Phase 1, identification of the problem; Phase 2, design and development of the framework; and Phase 3, content validity analysis. The respondents of this study were Malaysian employers involved in the engineering industry in Sabah, Malaysia. The survey responses were analysed, and confirmatory factor analysis (CFA) in AMOS was used to establish measurement validity and reliability and to test the hypotheses.

Gender

| | | Frequency | Percent | Valid Percent | Cumulative Percent |

| Valid | Male | 75 | 76.5 | 76.5 | 76.5 |

| | Female | 23 | 23.5 | 23.5 | 100 |

| | Total | 98 | 100 | 100 | |

Age

| | | Frequency | Percent | Valid Percent | Cumulative Percent |

| Valid | 20 – 29 | 13 | 13.3 | 13.3 | 13.3 |

| | 30 – 39 | 39 | 39.8 | 39.8 | 53.1 |

| | 40 – 49 | 27 | 27.6 | 27.6 | 80.6 |

| | 50 – 59 | 13 | 13.3 | 13.3 | 93.9 |

| | Above 60 | 6 | 6.1 | 6.1 | 100 |

| | Total | 98 | 100 | 100 | |

Race

| | | Frequency | Percent | Valid Percent | Cumulative Percent |

| Valid | Malay | 28 | 28.6 | 28.9 | 28.9 |

| | Chinese | 18 | 18.4 | 18.6 | 47.4 |

| | Indian | 13 | 13.3 | 13.4 | 60.8 |

| | Others | 38 | 38.8 | 39.2 | 100 |

| | Total | 97 | 99 | 100 | |

| Missing | System | 1 | 1 | | |

| Total | | 98 | 100 | | |

Highest Education

| | | Frequency | Percent | Valid Percent | Cumulative Percent |

| Valid | SPM | 6 | 6.1 | 6.1 | 6.1 |

| | Certificate | 2 | 2 | 2 | 8.2 |

| | Diploma | 6 | 6.1 | 6.1 | 14.3 |

| | Degree | 69 | 70.4 | 70.4 | 84.7 |

| | Master | 15 | 15.3 | 15.3 | 100 |

| | Total | 98 | 100 | 100 | |

Present Position

| | | Frequency | Percent | Valid Percent | Cumulative Percent |

| Valid | CEO/Managing Director/Director/Project Director | 24 | 24.5 | 24.5 | 24.5 |

| | General Manager/Project Manager/Construction Manager/Technical Manager/Design Manager | 18 | 18.4 | 18.4 | 42.9 |

| | Design Engineer/Resident Engineer/Senior Engineer/Project Engineer/Engineer | 41 | 41.8 | 41.8 | 84.7 |

| | Human Resource Officer/Admin Officer | 5 | 5.1 | 5.1 | 89.8 |

| | Others | 10 | 10.2 | 10.2 | 100 |

| | Total | 98 | 100 | 100 | |

Working Years

| | | Frequency | Percent | Valid Percent | Cumulative Percent |

| Valid | Less than 5 years | 14 | 14.3 | 14.3 | 14.3 |

| | 5 – 15 years | 46 | 46.9 | 46.9 | 61.2 |

| | More than 15 years | 38 | 38.8 | 38.8 | 100 |

| | Total | 98 | 100 | 100 | |

Engineering Industry

| | | Frequency | Percent | Valid Percent | Cumulative Percent |

| Valid | Construction | 34 | 34.7 | 34.7 | 34.7 |

| | Consultant | 15 | 15.3 | 15.3 | 50 |

| | Government Agency | 33 | 33.7 | 33.7 | 83.7 |

| | Developer | 5 | 5.1 | 5.1 | 88.8 |

| | Private Service Industry | 11 | 11.2 | 11.2 | 100 |

| | Total | 98 | 100 | 100 | |

Field of Engineering

| | | Frequency | Percent | Valid Percent | Cumulative Percent |

| Valid | Civil | 57 | 58.2 | 60 | 60 |

| | Electrical/Electronic | 8 | 8.2 | 8.4 | 68.4 |

| | Mechanical | 13 | 13.3 | 13.7 | 82.1 |

| | Structural | 2 | 2 | 2.1 | 84.2 |

| | Chemical | 2 | 2 | 2.1 | 86.3 |

| | Admin/Human Resource | 7 | 7.1 | 7.4 | 93.7 |

| | Technical/Vocational | 1 | 1 | 1.1 | 94.7 |

| | Others | 5 | 5.1 | 5.3 | 100 |

| | Total | 95 | 96.9 | 100 | |

| Missing | System | 3 | 3.1 | | |

| Total | | 98 | 100 | | |

Table 1: Demographics of participating employees
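The percentage columns in Table 1 follow directly from the frequencies. As a minimal sketch (the function name `frequency_table` is illustrative and not part of the study's statistical output), the Race panel can be reproduced as follows, with Percent based on all 98 respondents and Valid Percent on the 97 who answered:

```python
def frequency_table(counts, n_missing=0):
    """Return rows of (label, frequency, percent, valid percent, cumulative percent).

    `counts` maps each valid category to its frequency; `n_missing` is the
    number of respondents with no answer. Percentages are rounded to 1 d.p.
    """
    n_valid = sum(counts.values())
    n_total = n_valid + n_missing
    rows = []
    running = 0
    for label, freq in counts.items():
        running += freq
        rows.append((
            label,
            freq,
            round(100 * freq / n_total, 1),     # Percent (all respondents)
            round(100 * freq / n_valid, 1),     # Valid Percent (answered only)
            round(100 * running / n_valid, 1),  # Cumulative Percent
        ))
    return rows


# Frequencies taken from the Race panel of Table 1 (one missing response).
race_counts = {"Malay": 28, "Chinese": 18, "Indian": 13, "Others": 38}
race_rows = frequency_table(race_counts, n_missing=1)

for row in race_rows:
    print(row)
```

The same function applies to the other panels; only panels with missing responses (Race and Field of Engineering) show a difference between Percent and Valid Percent.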

Sampling adequacy was measured for each variable, namely communication, teamwork, knowledge, problem solving, lifelong learning, competence, professionalism, leadership, responsibility, decision making, interdependence, innovative, technical element and information technology. The Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy is 0.876 for communication, 0.872 for teamwork, 0.876 for knowledge, 0.887 for problem solving, 0.856 for lifelong learning, 0.846 for competence, 0.872 for professionalism, 0.875 for leadership, 0.914 for responsibility, 0.850 for decision making, 0.879 for interdependence, 0.898 for innovative, 0.887 for technical element and 0.815 for information technology. Hair et al. (2018) suggested that a KMO value greater than 0.6 indicates that the sampling is adequate to proceed with factor analysis. Bartlett’s Test of Sphericity (BTS) was significant for all variables (p < 0.001), which confirms that the inter-correlation matrix contains sufficient shared variance for the factor analysis to be valid. In summary, the KMO and BTS results in Table 2 show that the sampling was adequate to proceed with factor analysis.

| Skills | Kaiser-Meyer-Olkin Measure of Sampling Adequacy | Bartlett’s Test of Sphericity (Approx. Chi-Square) | Bartlett’s Test of Sphericity (df) | Bartlett’s Test of Sphericity (Sig.) |

| Communication | 0.876 | 365.978 | 10 | 0 |

| Teamwork | 0.872 | 369.61 | 10 | 0 |

| Knowledge | 0.873 | 435.528 | 10 | 0 |

| Problem Solving | 0.887 | 510.613 | 10 | 0 |

| Lifelong Learning | 0.856 | 599.419 | 10 | 0 |

| Competence | 0.846 | 419.288 | 10 | 0 |

| Professionalism | 0.872 | 511.076 | 10 | 0 |

| Leadership | 0.875 | 559.25 | 15 | 0 |

| Responsibility | 0.914 | 530.52 | 15 | 0 |

| Decision Making | 0.85 | 623.529 | 15 | 0 |

| Interdependence | 0.879 | 669.381 | 15 | 0 |

| Innovative | 0.898 | 503.24 | 10 | 0 |

| Technical Element | 0.887 | 556.894 | 10 | 0 |

| Information Technology | 0.815 | 400.449 | 10 | 0 |

Table 2: KMO and Bartlett’s Test for items group
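For readers who wish to see how the two statistics in Table 2 are obtained, the sketch below computes the KMO measure and Bartlett's test statistic from a correlation matrix using the standard textbook formulas. The helper names and the 3 × 3 example matrix are hypothetical; the study's own figures came from its statistical package and from item-level correlation matrices that are not reproduced here. The Bartlett degrees of freedom equal p(p - 1)/2 for p items, so a five-item group gives df = 10 and a six-item group gives df = 15, as in Table 2.

```python
import math


def invert_with_det(matrix):
    """Gauss-Jordan inversion with partial pivoting; returns (inverse, determinant)."""
    n = len(matrix)
    # Augment the matrix with the identity so the right half becomes the inverse.
    aug = [
        [float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
        for i, row in enumerate(matrix)
    ]
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("correlation matrix is singular")
        if pivot != col:
            aug[col], aug[pivot] = aug[pivot], aug[col]
            det = -det  # a row swap flips the sign of the determinant
        pivot_value = aug[col][col]
        det *= pivot_value
        aug[col] = [v / pivot_value for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * pv for v, pv in zip(aug[r], aug[col])]
    return [row[n:] for row in aug], det


def kmo(corr):
    """Overall KMO: squared correlations relative to squared partial correlations."""
    inv, _ = invert_with_det(corr)
    p = len(corr)
    sum_r2 = sum_q2 = 0.0
    for i in range(p):
        for j in range(p):
            if i != j:
                partial = -inv[i][j] / math.sqrt(inv[i][i] * inv[j][j])
                sum_r2 += corr[i][j] ** 2
                sum_q2 += partial ** 2
    return sum_r2 / (sum_r2 + sum_q2)


def bartlett_sphericity(corr, n_obs):
    """Return (chi-square, df) for Bartlett's test that corr is an identity matrix."""
    p = len(corr)
    _, det = invert_with_det(corr)
    chi_square = -(n_obs - 1 - (2 * p + 5) / 6) * math.log(det)
    return chi_square, p * (p - 1) // 2


# Hypothetical correlation matrix for three items (not data from the study).
example_corr = [
    [1.0, 0.5, 0.5],
    [0.5, 1.0, 0.5],
    [0.5, 0.5, 1.0],
]
kmo_value = kmo(example_corr)
chi_square, df = bartlett_sphericity(example_corr, n_obs=98)
print(round(kmo_value, 3), round(chi_square, 3), df)
```

The chi-square value is then compared against a chi-square distribution with the stated degrees of freedom to obtain the significance reported in the last column of Table 2.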

Moreover, to support the sampling validity, factor loadings and communalities based on principal components analysis with varimax rotation were computed for the 74 items. The 74 items were grouped into 14 factors, namely communication, teamwork, knowledge, problem solving, lifelong learning, competence, professionalism, leadership, responsibility, decision making, interdependence, innovative, technical element and information technology.

The factor loadings are shown in Table 3. In this factor analysis, the communalities of the items range from 0.464 to 0.919, which can be interpreted as the proportion of variance of each item explained by the 14 factors (communication, teamwork, knowledge, problem solving, lifelong learning, competence, professionalism, leadership, responsibility, decision making, interdependence, innovative, technical element and information technology). Principal components analysis was used to assign all items to specific factors or components, with a value of 0.5 (50%) or better regarded as adequate, as suggested by Garson (2012). All items meet this value except TS5 (0.464).

| Construct | Communication | Construct | Teamwork | Construct | Knowledge |

| CS1 | 0.822 | TS1 | 0.753 | K1 | 0.869 |

| CS2 | 0.786 | TS2 | 0.827 | K2 | 0.822 |

| CS3 | 0.855 | TS3 | 0.828 | K3 | 0.816 |

| CS4 | 0.82 | TS4 | 0.861 | K4 | 0.827 |

| CS5 | 0.548 | TS5 | 0.464 | K5 | 0.689 |

| Construct | Problem Solving | Construct | Lifelong Learning | Construct | Competence |

| PS1 | 0.841 | LL1 | 0.883 | C1 | 0.842 |

| PS2 | 0.853 | LL2 | 0.859 | C2 | 0.855 |

| PS3 | 0.83 | LL3 | 0.895 | C3 | 0.694 |

| PS4 | 0.745 | LL4 | 0.888 | C4 | 0.743 |

| PS5 | 0.919 | LL5 | 0.841 | C5 | 0.8 |

| Construct | Professionalism | Construct | Leadership | Construct | Responsibility |

| P1 | 0.835 | L1 | 0.75 | R1 | 0.848 |

| P2 | 0.881 | L2 | 0.783 | R2 | 0.744 |

| P3 | 0.899 | L3 | 0.759 | R3 | 0.817 |

| P4 | 0.766 | L4 | 0.857 | R4 | 0.838 |

| P5 | 0.795 | L5 | 0.788 | R5 | 0.766 |

| P6 | 0.765 | L6 | 0.824 | R6 | 0.762 |

| Construct | Decision Making | Construct | Interdependence | Construct | Innovative |

| DM1 | 0.817 | IN1 | 0.76 | IS1 | 0.861 |

| DM2 | 0.846 | IN2 | 0.797 | IS2 | 0.882 |

| DM3 | 0.834 | IN3 | 0.915 | IS3 | 0.769 |

| DM4 | 0.821 | IN4 | 0.804 | IS4 | 0.88 |

| DM5 | 0.841 | IN5 | 0.845 | IS5 | 0.863 |

| DM6 | 0.742 | IN6 | 0.869 | | |

| Construct | Technical Element | Construct | Information Technology |

| TE1 | 0.846 | IT1 | 0.891 |

| TE2 | 0.912 | IT2 | 0.904 |

| TE3 | 0.886 | IT3 | 0.9 |

| TE4 | 0.896 | IT4 | 0.825 |

| TE5 | 0.814 | IT5 | 0.905 |

Table 3: Factor loadings and communalities based on principal components analysis with varimax rotation for the 74 items

Note: Factor loading ≥ 0.5 (50%)
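The cut-off in the note above can be applied mechanically. The short sketch below screens the communication and teamwork values from Table 3 against the 0.5 threshold; the dictionary holds only this subset of the table, and the function name `items_below` is illustrative.

```python
THRESHOLD = 0.5  # cut-off stated in the note to Table 3

# Communication (CS) and teamwork (TS) values copied from Table 3.
table3_subset = {
    "CS1": 0.822, "CS2": 0.786, "CS3": 0.855, "CS4": 0.820, "CS5": 0.548,
    "TS1": 0.753, "TS2": 0.827, "TS3": 0.828, "TS4": 0.861, "TS5": 0.464,
}


def items_below(values, threshold=THRESHOLD):
    """Return the item codes whose value falls below the threshold, sorted by code."""
    return sorted(item for item, value in values.items() if value < threshold)


flagged = items_below(table3_subset)
print(flagged)
```

Extending the dictionary to the remaining constructs in Table 3 screens all items in one pass.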

Additionally, composite reliability was calculated to determine the internal consistency of each construct. The composite reliability should be greater than 0.7, although Suprapto and Stefany (2020) suggest that a value of 0.6 is also acceptable in many cases. Table 4 shows that the composite reliability is 0.599 for communication, followed by teamwork (0.578), knowledge (0.651), problem solving (0.704), lifelong learning (0.748), competence (0.616), professionalism (0.700), leadership (0.627), responsibility (0.653), decision making (0.638), interdependence (0.682), innovative (0.729), technical element (0.696) and information technology (0.604). Four constructs (problem solving, lifelong learning, professionalism and innovative) reach the 0.7 cut-off, and eight more lie between 0.6 and 0.7 and are acceptable under the more lenient criterion. Communication (0.599) and teamwork (0.578) fall just below 0.6 and are therefore the weakest constructs as measurement tools in this study.

| Items | Constructs and Measurement | Composite Reliability |

| 1 | Communication | 0.599 |

| 2 | Teamwork | 0.578 |

| 3 | Knowledge | 0.651 |

| 4 | Problem Solving | 0.704 |

| 5 | Lifelong Learning | 0.748 |

| 6 | Competence | 0.616 |

| 7 | Professionalism | 0.7 |

| 8 | Leadership | 0.627 |

| 9 | Responsibility | 0.653 |

| 10 | Decision Making | 0.638 |

| 11 | Interdependence | 0.682 |

| 12 | Innovative | 0.729 |

| 13 | Technical Element | 0.696 |

| 14 | Information Technology | 0.604 |

Table 4: Composite reliability

Cut-off Value (>0.70)

Furthermore, the Average Variance Extracted (AVE) was measured, which can support and better reflect the characteristics of each research variable in the model, as suggested by Arbuckle (2010). According to Suprapto and Stefany (2020), the minimum recommended AVE value is 0.5, although a value of 0.4 is still acceptable. Table 5 shows that the AVE value for communication is 0.7742, followed by teamwork (0.7606), knowledge (0.8068), problem solving (0.8394), lifelong learning (0.8650), competence (0.7852), professionalism (0.8367), leadership (0.7923), responsibility (0.8085), decision making (0.7987), interdependence (0.8259), innovative (0.8539), technical element (0.8343) and information technology (0.7778). Thus, the indicators for each variable are declared reliable, as every AVE value exceeds the minimum of 0.5.

| Items | Constructs and Measurement | AVE |

| 1 | Communication | 0.7742 |

| 2 | Teamwork | 0.7606 |

| 3 | Knowledge | 0.8068 |

| 4 | Problem Solving | 0.8394 |

| 5 | Lifelong Learning | 0.865 |

| 6 | Competence | 0.7852 |

| 7 | Professionalism | 0.8367 |

| 8 | Leadership | 0.7923 |

| 9 | Responsibility | 0.8085 |

| 10 | Decision Making | 0.7987 |

| 11 | Interdependence | 0.8259 |

| 12 | Innovative | 0.8539 |

| 13 | Technical Element | 0.8343 |

| 14 | Information Technology | 0.7778 |

Table 5: Average variance extracted (AVE)

Cut-off Value (>0.50)
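As a hedged illustration of how the two statistics are commonly defined, the sketch below applies the usual Fornell-Larcker style formulas: AVE as the mean of the squared standardized loadings, and composite reliability as the squared sum of loadings divided by that quantity plus the summed error variances. It assumes that the communication values in Table 3 are standardized loadings with uncorrelated errors; this is an assumption for illustration, and the figures need not coincide with those produced by the study's software.

```python
def average_variance_extracted(loadings):
    """Mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """(sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances).

    Error variance of each item is taken as 1 - loading^2, which assumes
    standardized loadings and uncorrelated measurement errors.
    """
    total = sum(loadings)
    error = sum(1 - l ** 2 for l in loadings)
    return total ** 2 / (total ** 2 + error)


# Communication values CS1 to CS5 from Table 3, treated here as loadings (assumption).
communication = [0.822, 0.786, 0.855, 0.820, 0.548]

ave = average_variance_extracted(communication)
cr = composite_reliability(communication)
print(round(ave, 3), round(cr, 3))
```

Under these assumptions the communication items give an AVE of about 0.599 and a composite reliability of about 0.880.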

The CFA is employed to measure the relationships between a set of observed variables and a set of continuous latent variables based on theory or empirical research given the sample data (Byrne, 2012). The study conducted the CFA analyses in two steps. First, several CFAs were conducted separately to specify and estimate the measurement of those multidimensional constructs and their indicators to test the validity of the hypothesized measurement model by fitting it to the data. The analyses helped define the postulated relationships between the observed items and underlying factors (student attribute, knowledge, and student perceived employability skills). Then, an additional CFA was carried out to test the full measurement model with all latent variables included and tested simultaneously.

According to Hair et al. (2010), validity and reliability can be measured using “Composite Reliability (CR), Average Variance Extracted (AVE), Maximum Shared Squared Variance (MSV) and Average Shared Squared Variance (ASV)”. To establish reliability, Hair et al. (2010) suggest that CR should be greater than 0.6 and preferably above 0.7. To establish convergent validity, the AVE should be greater than 0.5 and the CR should be greater than the AVE. Discriminant validity is supported if both the MSV and the ASV are less than the AVE. According to Tabachnick and Fidell (2007), convergent validity can be assessed using factor loading and AVE.
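The criteria in the paragraph above can be written as a small rule set. The sketch below encodes them directly; the class and function names are illustrative, and the two sets of statistics are hypothetical values chosen only to show one passing and one failing case, not results from this study.

```python
from dataclasses import dataclass


@dataclass
class ConstructStats:
    name: str
    cr: float   # composite reliability
    ave: float  # average variance extracted
    msv: float  # maximum shared squared variance
    asv: float  # average shared squared variance


def assess(c):
    """Apply the Hair et al. (2010) thresholds quoted in the text."""
    return {
        "reliable": c.cr > 0.7,             # preferred threshold
        "marginally_reliable": c.cr > 0.6,  # minimum threshold
        "convergent": c.ave > 0.5 and c.cr > c.ave,
        "discriminant": c.msv < c.ave and c.asv < c.ave,
    }


# Hypothetical values for illustration only.
strong = ConstructStats("example A", cr=0.85, ave=0.60, msv=0.45, asv=0.30)
weak = ConstructStats("example B", cr=0.65, ave=0.45, msv=0.50, asv=0.30)

strong_result = assess(strong)
weak_result = assess(weak)
print(strong_result)
print(weak_result)
```

Keeping the preferred and minimum reliability thresholds as separate flags makes it explicit which of the two criteria a construct satisfies.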

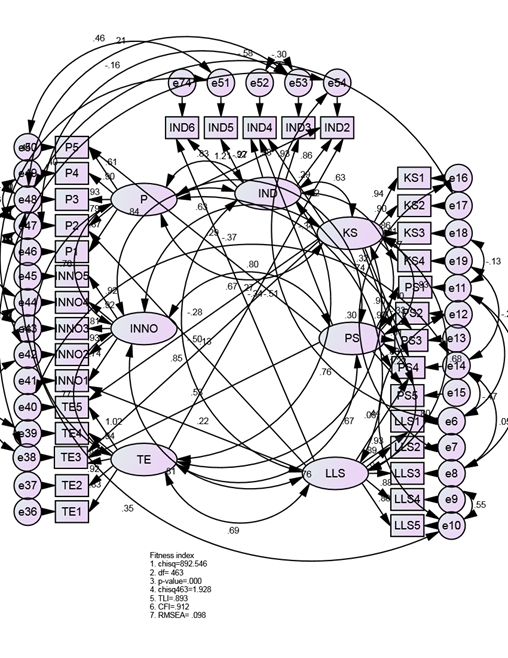

Therefore, after checking the goodness-of-fit indices in addition to validity and reliability, the final refined model results suggest eliminating communication, teamwork, competence, leadership, responsibility, decision making and information technology from the model. The refined model results also suggest removing items KS5 and IND1.

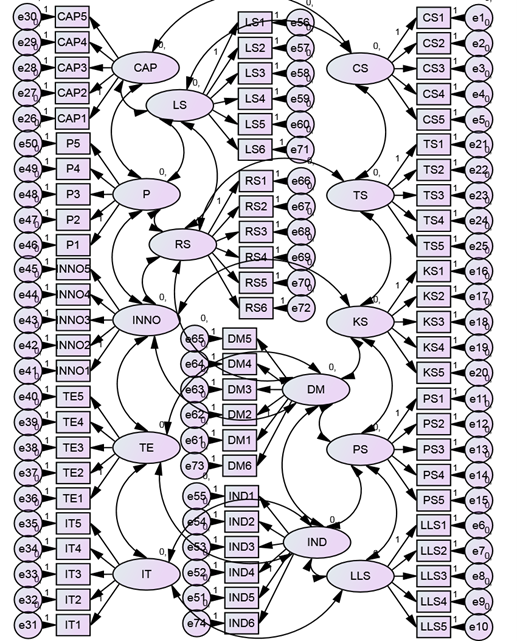

Figure 1: Validity construct

Confirmatory Factor Analysis (CFA) was employed to determine how and to what extent the observed variables are linked to their underlying factors (Byrne, 2012). Initially, exploratory factor analysis (EFA) helped to identify the factor structure and the number of underlying factors (Byrne, 2012). The Maximum Likelihood method was used to extract underlying factors. The direct Oblimin method was used for factor rotation in this study to ensure that the factors within each latent variable would be theoretically correlated (Meyers, Gamst, & Guarino, 2013). The CFA analyses of the seven key constructs (knowledge, problem solving, lifelong learning, professionalism, interdependence, innovative and technical element) were conducted separately, as is customary. An eigenvalue greater than 1.0 and the total explained variance were used to identify the number of factors (Kaiser, 1960). Factor loadings larger than 0.40 were considered salient, and the corresponding items were retained (Fabrigar et al., 1999). About 5% (n = 98) of the sample was used for the CFA. All the results supported the conceptualisation of seven key constructs.

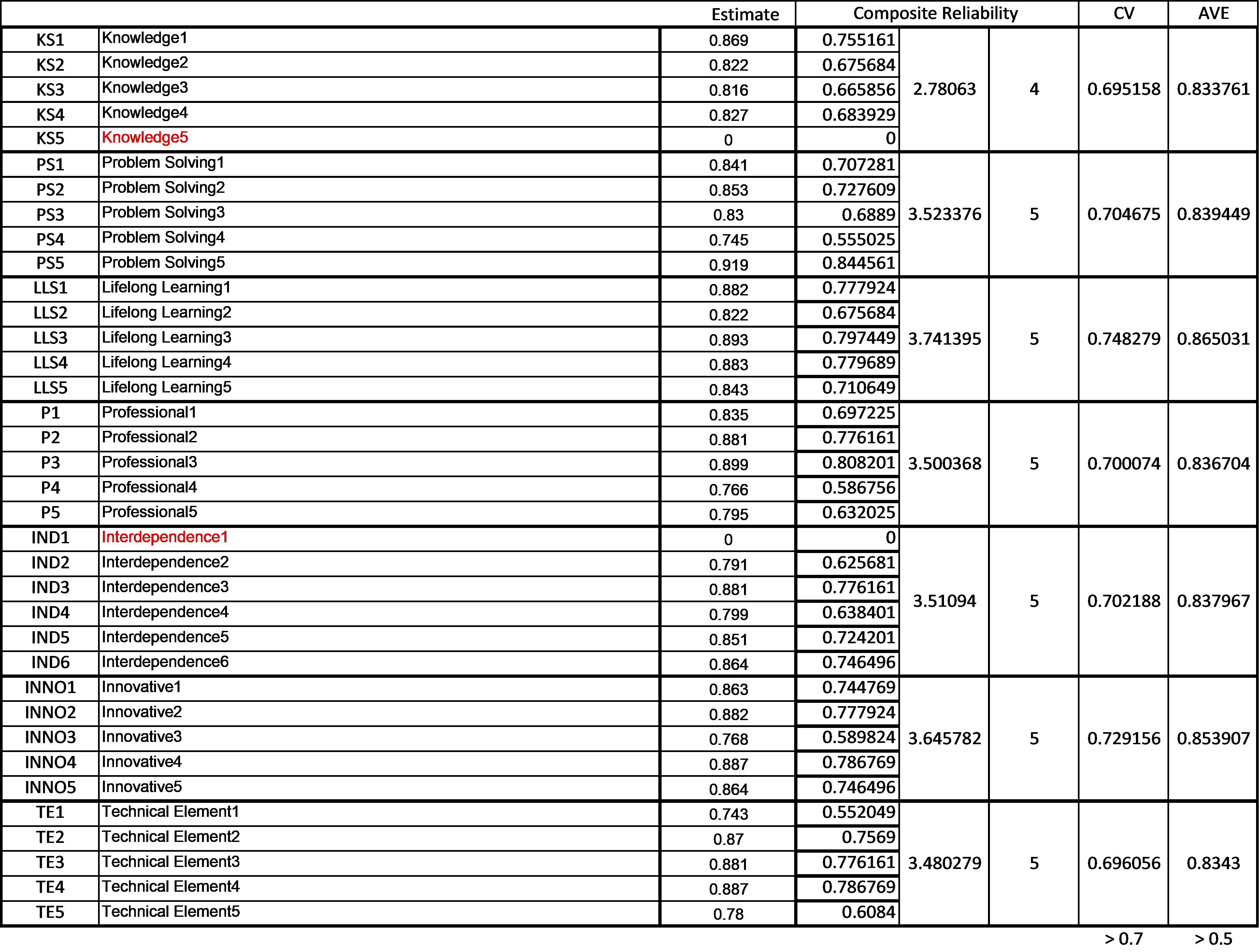

For the employability sample, as shown in Table 6, the average variance extracted (AVE) values were all above 0.5 and a few composite reliability (CR) values were above 0.7. Therefore, all factors have adequate reliability and convergent validity.

Table 6: Selected confirmatory factor analysis

Figure 2: Full Structural Equation Model (SEM)

Based on the same goodness-of-fit criteria used for the measurement model, the fit indices for the modified run of the proposed model were as follows: CMIN = 892.546; df = 463; CMIN/DF = 1.928; TLI = 0.893; CFI = 0.912; RMSEA = 0.098. The CMIN/DF and CFI values indicate an acceptable fit, although the TLI is marginally below the commonly used 0.90 threshold and the RMSEA is above the commonly used 0.08 cut-off.
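Two of the reported indices can be recomputed from the chi-square value, the degrees of freedom and the sample size. The sketch below assumes a sample of 98 respondents (the total in Table 1) and the common RMSEA formula with N - 1 in the denominator; some software uses N instead, so the choice is an assumption. The function names are illustrative.

```python
import math


def cmin_df(chi_square, df):
    """Normed chi-square: chi-square divided by its degrees of freedom."""
    return chi_square / df


def rmsea(chi_square, df, n_obs):
    """Root mean square error of approximation, using N - 1 in the denominator."""
    return math.sqrt(max(chi_square - df, 0.0) / (df * (n_obs - 1)))


# Values reported for the modified model; n_obs = 98 is taken from Table 1.
CMIN, DF, N_OBS = 892.546, 463, 98

normed_chi_square = cmin_df(CMIN, DF)
rmsea_value = rmsea(CMIN, DF, N_OBS)
print(round(normed_chi_square, 3), round(rmsea_value, 3))
```

With these inputs the recomputed values round to 1.928 and 0.098, in agreement with the reported CMIN/DF and RMSEA.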

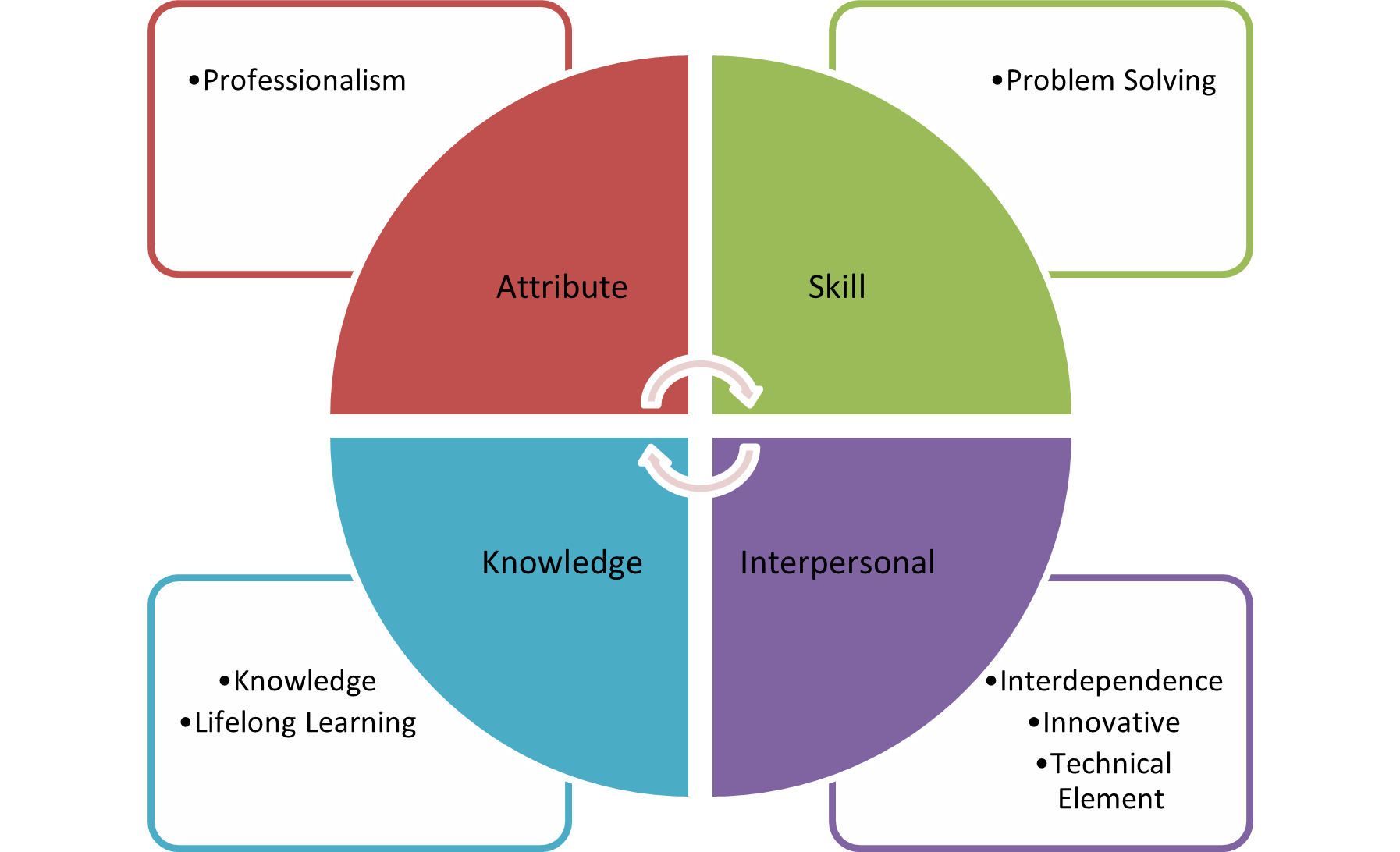

Figure 3: Sabah Engineering Graduates Employability Skills (SEGES)

4.0 Discussion

The results of the confirmatory factor analysis revealed that seven constructs (communication, teamwork, competence, leadership, responsibility, decision making and information technology) had to be deleted from the initial measurement model for the engineering employability skills sample. Deletion was based on indicators that demonstrated high covariance together with high regression weights. Having established the validity and reliability of the constructs, the next step was to evaluate the structural model in order to test the hypothesised relationships among the constructs within the proposed research model. The path model showed that 7 of the 14 direct hypotheses for both samples were accepted; the remaining 7 factors were rejected as not significantly associated and were not carried forward to model development. The retained skills can be regrouped into four categories: Attribute (professionalism), Skill (problem solving), Knowledge (knowledge and lifelong learning) and Interpersonal (innovative, interdependence and technical element).
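The regrouping described above can be captured as a simple data structure, which also makes it easy to check that the retained and removed constructs together account for all 14 original skills. The variable names below are illustrative; the construct names and groupings are those given in the text.

```python
# The 14 constructs examined in the study.
MEES_CONSTRUCTS = [
    "communication", "teamwork", "knowledge", "problem solving",
    "lifelong learning", "competence", "professionalism", "leadership",
    "responsibility", "decision making", "interdependence", "innovative",
    "technical element", "information technology",
]

# Constructs deleted from the initial measurement model.
REMOVED = {
    "communication", "teamwork", "competence", "leadership",
    "responsibility", "decision making", "information technology",
}

# Retained constructs, regrouped into the four categories named in the text.
REGROUPED = {
    "Attribute": ["professionalism"],
    "Skill": ["problem solving"],
    "Knowledge": ["knowledge", "lifelong learning"],
    "Interpersonal": ["innovative", "interdependence", "technical element"],
}

retained = [skill for group in REGROUPED.values() for skill in group]

for category, skills in REGROUPED.items():
    print(f"{category}: {', '.join(skills)}")
```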

References

- Abdullahi, N. J. K. (2020). Managing teaching approach in early childhood care education towards skill development in Nigeria. Southeast Asia Early Childhood Journal, 9(1), 59-74.

- Berliner, D. C. (2004a). Expert teachers: Their characteristics, development and accomplishments. In R. Batllori i Obiols, A. E Gomez Martinez, M. Oller i Freixa & J. Pages i. Blanch (eds.), De la teori a l-aula: Formacio del professorat ensenyament de las ciències socials (pp. 13-28).

- Berliner, D. C. (2004b). Describing the Behavior and Documenting the Accomplishments of Expert Teachers. Bulletin of Science, Technology & Society, 24(3).

- Bodjanova, S. (2006). Median alpha-levels of a fuzzy number. Fuzzy Sets and Systems, 157(7), 879-891.

- Bojadziev G, Bojadziev M., (2007). Fuzzy Set for Business, Finance and Management. World Scientific Publishing Co. Pte. Ltd. Singapore.

- Chang, P. T., Huang, L. C., & Lin, H. J. (2000). The Fuzzy Delphi method via fuzzy statistics and membership function fitting and an application to the human Resource. Fuzzy Sets and Systems,112(3), 511–520.

- Chang, P. L., Hsu, C. W., & Chang, P. C. (2011). Fuzzy Delphi method for evaluating hydrogen production technologies. International Journal of Hydrogen Energy, 36(21), 14172-14179.

- Cheng, C. H., & Lin, Y. (2002). Evaluating the best main battle tank using fuzzy decision theory with linguistic criteria evaluation. European journal of operational research, 142(1), 174-186.

- Hair, J., Black, W., Babin, B., Anderson, R., & Tatham, R. (2006). Multivariate Data Analysis (6th ed.). Pearson Prentice Hall: Upper Saddle River, NJ.

- Ho, Y.F. & Chen, H.L (2007). Healthy housing rating system. Journal of Architecture, 60,115- 136.

- Hsu, Y.L., Lee, C.H. & Kreng, V.B. (2010). The application of Fuzzy Delphi Method and Fuzzy AHP in lubricant regenerative technology selection. Expert System with Application, 37, 419-425.

- Jamil, M. R. M., Hussin, Z., Noh, N. R. M., Sapar, A. A., & Alias, N. (2013). Application of Fuzzy Delphi Method in educational research. Saedah Siraj, Norlidah Alias, DeWitt, D. & Zaharah Hussin (Eds.), Design and developmental research, 85-92.

- Jafari, A., Jafarian, M., Zareei, A. & Zaerpour, F. (2008). Using Fuzzy Delphi Method in Maintenance Strategy Selection Problem. Journal of Uncertain System, 2(4), 289 – 298.

- Kaufmann, A., & Gupta, M.M. (1988). Fuzzy Mathematical Models in Engineering and Management Science. Elsevier Science Inc.

- Kazemi, S., Homayouni, S. M., & Jahangiri, J. (2015). A Fuzzy Delphi-Analytical Hierarchy Process Approach for Ranking of Effective Material Selection Criteria. Advances in Materials Science and Engineering, 2015. https://doi.org/10.1155/2015/845346

- Kharuddin, A. F., Kamaruddin, S. A., Kamari, M. N., Mustafa, Z., & Azid, N. (2018). Determining important factors of arithmetic skills among newborn babies’ at Malaysian taska using artificial neural network. Southeast Asia Early Childhood Journal, 7, 33-41

- Ma, Z., Shao, C., Ma, S., and Ye, Z., Constructing road safety performance indicators using Fuzzy Delphi Method and Grey Delphi Method, Expert Systems with Applications. Vol, 38, No 3, 2010, pp 1509-1514.

- Mamat, C. L. C., & Yunus, H. (2018). Early exploration of Forest School framework for Malaysian Indigenous People: The Application of Fuzzy Delphi Technique. Int. J. of Multidisciplinary and Current research, 6.

- Murray, T., Pipino, L., & Vangigch, J. (1985). A pilot study of Fuzzy set modification of Delphi. Human System Management, 5(1), 6-80.

- Mohd, R., Siraj, S., & Hussin, Z. (2018). Fuzzy Delphi Method Application in Developing Model of Malay Poem based on the Meaning of the Quran about Flora, Fauna and Sky Form 2. Jurnal Pendidikan Bahasa Melayu – JPBM (Malay Language Education Journal – MyLEJ) APLIKASI, 8(2), 57–67.

- Rahman, M. N. A., Nor, M. M., Nadzim, N. A., Radzi, N. M. M., & Moktar, N. (2017). Application of Fuzzy Delphi Approach in Designing Homeschooling Education for Early Childhood Islamic Education. International Journal of Academic Research in Business and Social Sciences, 6(12). https://doi.org/10.6007/ijarbss/v6-i12/2566.

- Shariza Said, Loh Sau Cheong, Mohd Ridhuan Mohd Jamil, Yusni Mohamad Yusop, Mohd Ibrahim K. Azeez, & Ng Poi Ni. (2014). Analisis Masalah dan Keperluan Guru Pendidikan Khas Integrasi (Masalah Pembelajaran) Peringkat Sekolah Rendah Tentang Pendidikan Seksualiti. Jurnal Pendidikan Bitara UPSI (JPBU), 7, 77- 85.

- Saedah Siraj, Norlidah Alias, Dorothy Dewitt & Zaharah Hussin. (2013). Design and developmental research: Emergent Trends in Educational Research. Pearson Malaysia.

- Tang, C.W. & Wu, C.T. (2010). Obtaining a picture of undergraduate education quality: a voice from inside the university. Higher Education, 60, 269-286. Springer.

- Tahriri, F., Mousavi, M., Hozhabri Haghighi, S., & Zawiah Md Dawal, S. (2014). The application of fuzzy Delphi and fuzzy inference system in supplier ranking and selection. Journal of Industrial Engineering International, 10(3). https://doi.org/10.1007/s40092-014-0066-6

- Tarmudi, Z., Muhiddin, F. A., Rossdy, M., & Tamsin, N. W. D. (2016). Fuzzy Delphi Method for evaluating effective teaching based on students’ perspective. E-Academia Journal UiTMT, 5(1), 1–10. https://doi.org/10.1017/CBO9781107415324.004.

- Thomaidis N.S and Nikitakos N. and Dounias G. (2006).The Evaluation of Information Technology Projects: a Fuzzy Multicriteria Decision-making Approach, International Journal of Information Technology and Decision Making, volume 5,pages89-122.

- Wu, D., & Tan, W. W. (2006). Genetic learning and performance evaluation of interval type-2 fuzzy logic controllers. Engineering Applications of Artificial Intelligence, 19(8), 829- 841.

- Wu, K. Y. (2011). Applying the fuzzy Delphi method to analyze the evaluation indexes for service quality after railway re-opening – Using the Old Mountain Line Railway as an example. Recent Researches in System Science – Proceedings of the 15th WSEAS International Conference on Systems, Part of the 15th WSEAS CSCC Multiconference, 474–479.

- Yusof, N. A. A. M., Siraj, S., Nor, M. M., & Ariffin, A. (2018). Fuzzy Delphi Method (fdm): Determining phase for multicultural-based model of peace education curriculum for preschool children. Journal of Research, Policy & Practice of Teachers & Teacher Education (JRPPTTE), 8(1), 5–17.

- Yusoff, N. M., & Yaakob, M. N. (2016). Analisis Fuzzy Delphi Terhadap Halangan Dalam Pelaksanaan Mobile Learning Di Institut Pendidikan Guru. Jurnal Penyelidikan Dedikasi, 11, 32–50.

- Zadeh L.A. (1965). Fuzzy sets and systems, System Theory (Fox J., ed.), Microwave Research Institute Symposia Series XV, Polytechnic Press, Brooklyn, NY, 29-37. Reprinted in Int. J. of General System.